Exploring the options of sharing APIs in p2p environments

Imagine an API that doesn’t live on a server, that you don’t need an internet connection for, and that can be duplicated, modified and improved on by anyone. Say hello to my latest brainfart: peer-to-peer APIs! Or better yet: the pAPI, a system which lets people share their own API with other people.

Although building a peer-to-peer API is certainly not a trivial task, peer-to-peer APIs can open up a world of user-hosted systems. These systems can share information and functionality without the need for a centralised server or cloud hosting service. On top of that, building on a peer-to-peer system gives us additional benefits for free, like resilience against censorship and failure, content persistence and high availability. Let’s dive in!

Hold on, hold on. What is this peer-to-peer thing?

Through the years we have become accustomed to centralised services like Facebook or Google. Trusting these 3rd parties leads to several issues that are inherent to centralised systems. Peer-to-peer networks and systems, in contrast, allow users to host and manage their own content, removing the need to trust a 3rd party.

You think your data is safe on the servers of these big companies? You might be wrong. While these servers are protected very well against malicious users that want to steal your data, when it comes down to paying users that want your data, anything goes — as Facebook proved last month in the Cambridge Analytica debacle.

Peer-to-peer systems can also keep information available when someone tries to take it down. When the Spanish government blocked pages that informed voters about voting booth locations for the Catalan independence referendum of 2017, the Catalan government used IPFS so that peers could host the content on their own machines. This way, the voting booth information was still available to Catalan citizens.

Besides protecting users against censorship, peer-to-peer systems offer several other benefits. Users host their own content, meaning they don't need to trust any 3rd parties with their private information. Another advantage of managing your own content is that attackers no longer have a single, central point of attack. Since content is distributed, breaching a single node doesn't expose any other user's content.

Sounds great in theory...

This all sounds great of course, but how about some practical applications? For us as front-end developers the browser is our favourite playground. Thanks to WebRTC we can finally connect browsers directly to each other without needing to install any plugins or desktop applications. An example: the BitTorrent protocol is now available for Node.js and the browser through the WebTorrent client. With WebTorrent, users can share files directly with each other: a straightforward user experience, without relying on a server that would otherwise store your files who knows where & for how long.

Another exciting example: IPFS is a technology that enables users to retrieve content and host it themselves. IPFS is developed by Protocol Labs and aims to one day replace HTTP. Content on IPFS is not addressed by the location of a server, but by a hash of the content itself (content addressing), so anyone who holds a copy can serve it. If you think putting your content online nowadays is easy, IPFS will blow your mind.
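To get a feel for content addressing, here is a minimal sketch of a toy in-memory store in TypeScript. It is not the IPFS API: the `ContentStore` class and its `put` and `get` methods are made up for this post, and plain hex SHA-256 digests stand in for the multihash-based content identifiers that IPFS really uses.

```typescript
import { createHash } from "node:crypto";

// Toy content-addressed store (hypothetical, for illustration only).
// Real IPFS uses multihash-based content identifiers and a network of peers.
class ContentStore {
  private blocks = new Map<string, string>();

  // Stores the content and returns its address: the SHA-256 hash of the content.
  put(content: string): string {
    const address = createHash("sha256").update(content, "utf8").digest("hex");
    this.blocks.set(address, content);
    return address;
  }

  // Looks content up by its address, not by where it is stored.
  get(address: string): string | undefined {
    return this.blocks.get(address);
  }
}

const store = new ContentStore();
const address = store.put("<h1>Hello, decentralised web</h1>");
const sameAddress = store.put("<h1>Hello, decentralised web</h1>");
const otherAddress = store.put("<h1>Hello, centralised web</h1>");

console.log(address === sameAddress); // true: same content, same address
console.log(address === otherAddress); // false: different content, different address
console.log(store.get(address)); // <h1>Hello, decentralised web</h1>
```

Because the address depends only on the content, it doesn't matter which peer hands you the bytes: you can always recompute the hash and check that you got what you asked for.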

Ok, but what about sharing an API?

Sharing content is pretty great — after all it was what the web was invented for — but in 2018 we can do better than just share content, right?

What if besides sharing content we can also share functionality? What I mean by this is that besides distributing a webpage we also want to be able to add dynamic content from others to our pages. Currently, to do this we would still need to connect to centralised API servers... But you don’t want to connect to centralised services anymore (since you’ve read this far, you must be an enthusiast of the decentralised web), so how can we fix this? This is where the concept of peer-to-peer APIs comes in.

Since the source code of an API is just plain text, whatever programming language it is written in, we can calculate a cryptographically strong hash of it. When a peer decides to clone an API, she or he can recompute that hash and be sure that the cloned API is indeed the API that she or he intended to clone and not some malicious modification of the original code.
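A minimal sketch of that check in TypeScript, assuming SHA-256 as the hash function and Node.js as the runtime. The helpers `hashApiSource` and `verifyClone` are hypothetical names made up for this post, not part of any existing pAPI implementation.

```typescript
import { createHash } from "node:crypto";

// Hypothetical helper: the SHA-256 digest of an API's source code, as hex.
function hashApiSource(source: string): string {
  return createHash("sha256").update(source, "utf8").digest("hex");
}

// Hypothetical helper: accept a clone only if its hash matches the hash
// of the API the peer intended to clone.
function verifyClone(expectedHash: string, clonedSource: string): boolean {
  return hashApiSource(clonedSource) === expectedHash;
}

// The author publishes the hash alongside the API.
const originalSource = `export function greet(name) { return "Hello, " + name; }`;
const publishedHash = hashApiSource(originalSource);

// A malicious peer changes the code before passing it on.
const tamperedSource = originalSource.replace("Hello", "Goodbye");

console.log(verifyClone(publishedHash, originalSource)); // true
console.log(verifyClone(publishedHash, tamperedSource)); // false
```

Note that this only proves the code matches a given hash. How a peer learns the right hash in the first place is a separate problem that this sketch doesn't address.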

To define the interface that is offered by the API we could use a format similar to the OpenAPI Specification (OAS, formerly known as Swagger) that describes the possible interactions and the required pre- and post-conditions of the API.
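What such a description could look like is still open, but here is a rough sketch in TypeScript. Everything in it is an assumption for illustration: the `ApiDescription` and `Operation` shapes, the `callChecked` helper and the `greeter` example are made up, and none of this is the actual OpenAPI schema.

```typescript
// Hypothetical description of a single interaction offered by an API.
interface Operation {
  name: string;
  description: string;
  // Must hold for the input before the operation runs.
  precondition: (input: unknown) => boolean;
  // Must hold for the input/output pair after the operation has run.
  postcondition: (input: unknown, output: unknown) => boolean;
}

// Hypothetical description of a whole API.
interface ApiDescription {
  title: string;
  version: string;
  operations: Operation[];
}

const greeterApi: ApiDescription = {
  title: "greeter",
  version: "0.1.0",
  operations: [
    {
      name: "greet",
      description: "Returns a greeting for the given name.",
      precondition: (input) => typeof input === "string" && input.length > 0,
      postcondition: (input, output) =>
        typeof output === "string" && output.includes(String(input)),
    },
  ],
};

// Runs an implementation while enforcing the declared conditions.
function callChecked(
  api: ApiDescription,
  operationName: string,
  implementation: (input: unknown) => unknown,
  input: unknown
): unknown {
  const operation = api.operations.find((op) => op.name === operationName);
  if (!operation) throw new Error(`Unknown operation: ${operationName}`);
  if (!operation.precondition(input)) throw new Error("Precondition failed");
  const output = implementation(input);
  if (!operation.postcondition(input, output)) throw new Error("Postcondition failed");
  return output;
}

const greet = (input: unknown) => `Hello, ${String(input)}!`;
console.log(callChecked(greeterApi, "greet", greet, "Casper")); // Hello, Casper!
```

A real format would need to be plain data rather than functions so that it can be hashed and shared like the API itself. The functions here just keep the sketch short.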

After a peer clones an API, the API can be backed by the peer’s own data. Alternatively, the peer can decide to clone not only the API, but also the data that backs it. Again, the authenticity of the data can be verified using a cryptographic hash of the dataset.

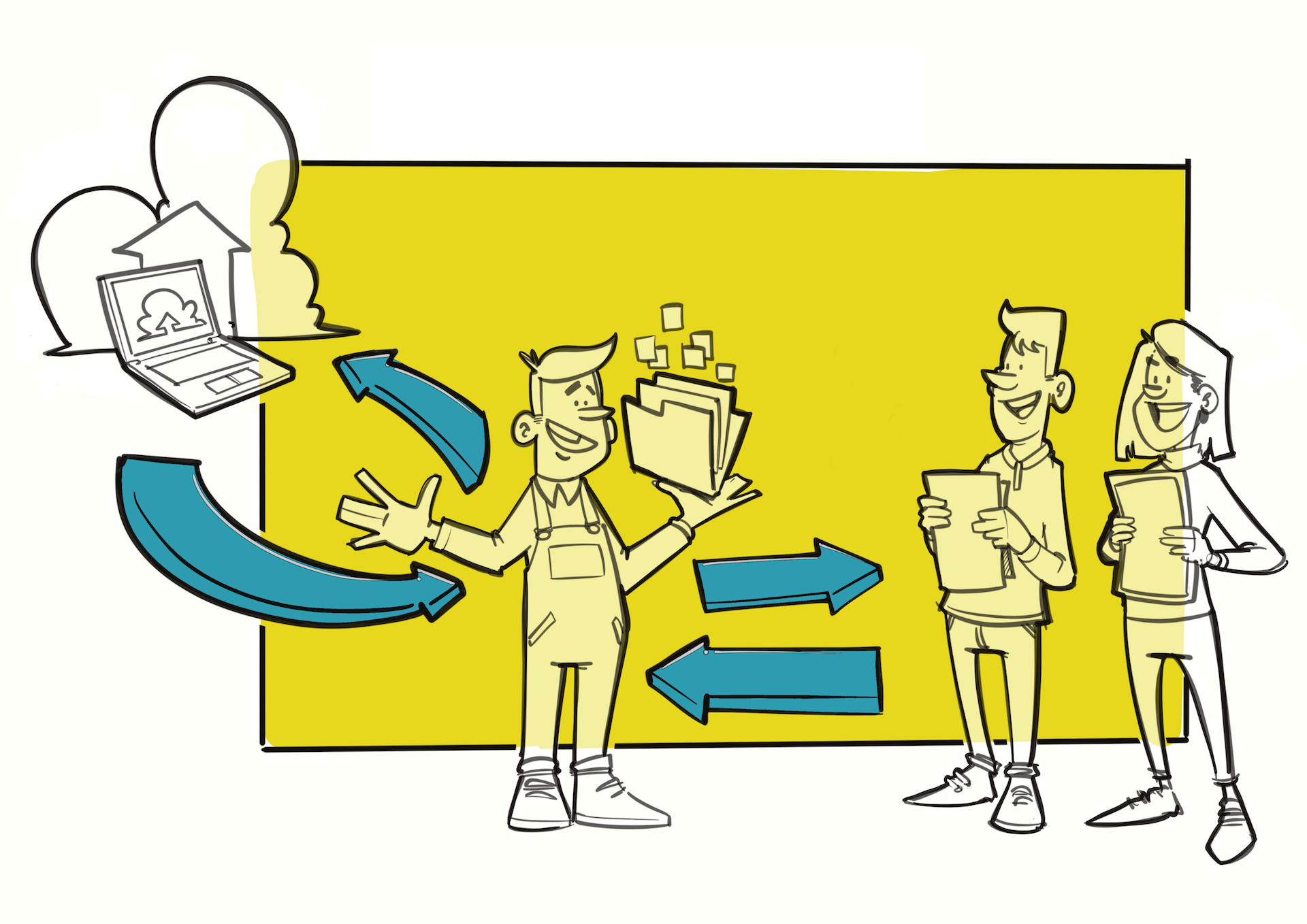

To clarify this, here are 2 illustrations:

Casper clones an API including the data that is offered with the API. After cloning and the initial setup are done, other peers can connect to Casper and enjoy the functionality offered by the API.

Again, Casper clones an API. But this time, Casper decides that his own data is more useful than the original dataset. After cloning the API he configures his system to use his own data. This can be a way to offer 3rd parties information about yourself that you actually want to share, instead of Facebook doing it behind your back.
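The two scenarios above can be sketched roughly like this in TypeScript. All of it is assumption: `SharedApi`, `cloneApi` and `hashDataset` are hypothetical names, the dataset is a tiny key/value object, and SHA-256 over a key-sorted JSON form stands in for whatever hashing scheme a real pAPI system would use.

```typescript
import { createHash } from "node:crypto";

type Dataset = Record<string, string>;

// Hashes a canonical form of the dataset: entries are sorted by key,
// so the order in which keys were added doesn't change the hash.
function hashDataset(data: Dataset): string {
  const canonical = JSON.stringify(
    Object.keys(data).sort().map((key) => [key, data[key]])
  );
  return createHash("sha256").update(canonical, "utf8").digest("hex");
}

// Hypothetical shape of what a peer shares: functionality plus optional data.
interface SharedApi {
  lookup: (data: Dataset, key: string) => string | undefined;
  originalData: Dataset;
  originalDataHash: string;
}

// Clones the API. Without ownData the original dataset is used, but only
// after checking it against its published hash (scenario 1). With ownData
// the cloned functionality runs on the peer's own data (scenario 2).
function cloneApi(shared: SharedApi, ownData?: Dataset): (key: string) => string | undefined {
  if (ownData === undefined && hashDataset(shared.originalData) !== shared.originalDataHash) {
    throw new Error("Dataset does not match its published hash");
  }
  const data = ownData ?? shared.originalData;
  return (key) => shared.lookup(data, key);
}

const originalData: Dataset = { favouriteColour: "blue" };
const shared: SharedApi = {
  lookup: (data, key) => data[key],
  originalData,
  originalDataHash: hashDataset(originalData),
};

const withOriginalData = cloneApi(shared);
const withOwnData = cloneApi(shared, { favouriteColour: "green" });

console.log(withOriginalData("favouriteColour")); // blue
console.log(withOwnData("favouriteColour")); // green
```

In both cases the functionality is identical. Only the data behind it changes, and Casper decides which data that is.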

Wow, I’m in!

Currently I'm working on a draft version of a pAPI system using libp2p and WebRTC. Sharing "generic" APIs between peers is pretty complex, so I'm aiming to finish this project by the end of summer 2018. Hopefully my next post will be An Introduction to pAPIs!

Some links & thanks

- For a more detailed look at the benefits of peer-to-peer systems and the differences when compared to current systems, read this great article by Chris Dixon.

- To learn more about IPFS check out this talk by one of the developers of IPFS.

- Visit this list of awesome applications leveraging the benefits of the decentralised web.

- Thanks to my colleague Lodo for the awesome illustrations in this post!